Now that Gopher has taught us all about Queues, let us take a look at a few common implementations of Queue which helps us with many a daily tasks.

Web Browser History

All web browsers maintain a record of the websites that a user visits so that we can load the website a lot faster the next time the user decides to visit the same.

Have you ever wondered why the browser never run out of space inspite of recording a history of all our web activities?

This is because not only does the browser record all the web activity in a Queue but also has an eviction strategy in place to clear its contents when the time comes.

Most web browsers have a 30 day eviction period, which means the first entry into the browser history Queue would be Dequeued at the end of 30 days.

In this way we continuously Dequeue the contents of the Queue after it exceeds the specified time fame and hence the browser never really runs out of space.Pretty cool right?

Message Queue

Before we understand what a Message Queue is, let us familiarise ourselves with some terminologies.

Message: A message is the data that is transported between two parties.

Messenger: A messenger can be considered as the channel through which Messages are transmitted.

Producer/Sender : A Producer/Sender can be considered as the originator of the Message which is propagated through the Messenger.

Consumer/Receiver: A Consumer/Receiver is responsible for connecting to the Messenger and downloading the Messages sent by a Producer/Sender.

In short, a Producer reaches out to the Messenger(Queue) and sends a Message, the Producer then gets on to doing other things as he/she wont hear back from the Messenger anytime soon.

On the other side, a Consumer connects to the Messenger(Queue) to receive all the Messages that was send directly to the Consumer.

The Consumer can then process this information and reply back via the same medium, only, now the roles would be reversed.

Let us now relook at the above definitions from a Computer Science Perspective.

Message: A message is essentially a byte array with some headers at the top which is exchanged between the Producer & Consumer.The headers essentially contains information regarding the origin and some description about the data packet being transmitted.

Messenger: A messenger refers to the Message Queue that servers as the communication channel/Storage in the event the Consumer is offline.

Let us go ahead and summarise this.

- The basic concept behind a Message Queue is simple, there are applications called Producers that creates data packets called Messages and delivers them to the Message Queue.

- Another application, called a Consumer, connects to the Message Queue and downloads these Messages so that they can be processed.

- The Messages maybe stored in the Queue for some time before the Consumer retrieves them.

- Once processed the the information will be transferred back to the source, only, now the roles become reversed.

Due to the decoupled way in which the messages are transmitted and because the Producer does not expect a reply immediately we can consider this as an Asynchronous Communication Protocol.

Some of the common Message Queues are: RabbitMQ, AWS SQS etc.

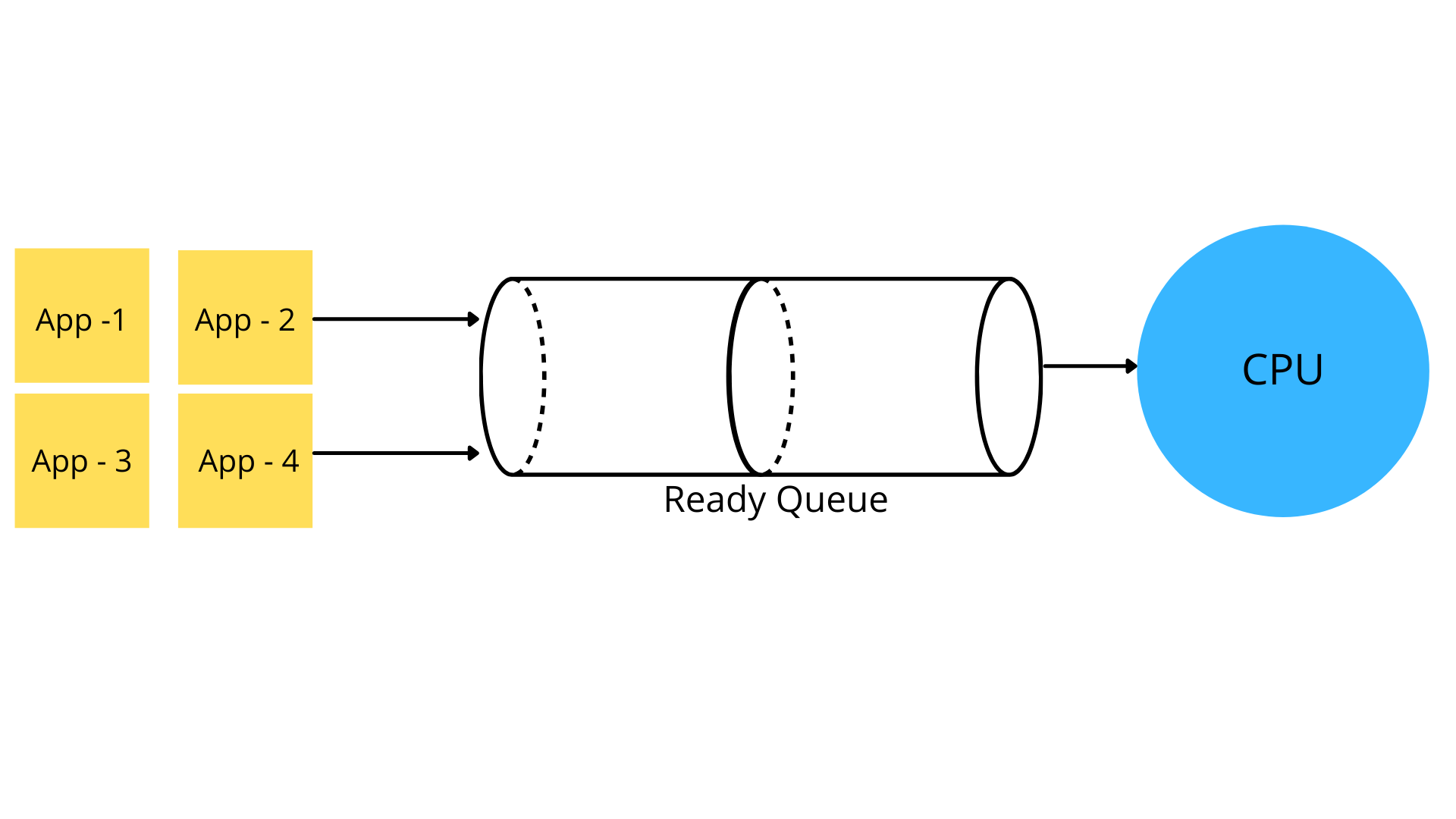

CPU Scheduling

Every Compute Engine has a CPU and memory(HDD) which has to be shared between all the applications that are installed and running on it. Since all these different processes/applications are competing with each other to gain access to these shared resources.

In such a scenario, how would one ensure that these resource allocation happens efficiently and effectively?

CPU Scheduling is the process of determining which process will occupy the CPU for execution of its tasks.

The main reason for having a CPU scheduling system is to make sure that every time a CPU remains idle, the OS has a process available in the ready queue for execution.The ownership of deciding which thread will execute at what point of time is determined by a CPU scheduler.

The commonly used CPU scheduling algorithms are:

- First Come First Serve(FCFS)

- Shortest Job First Scheduling

- Shortest Remaining Time

- Priority Scheduling

- Round Robin Scheduling

- Multilevel Queue Scheduling

Lets us understand these algorithms a little more in detail.

First Come First Serve (FCFS) - In this method, whichever process requests access to the CPU first gets the CPU allocated to it.

Shortest Job First (SJF) - In this method, the process with the shortest execution time gets the CPU allocated to it.

Shortest Remaining Time (SRT) - In this method, the CPU will be allocated to a process which is closest to completion.

Priority - In this method, the CPU will be allocated to a process based on the priority value assigned to it at process initialisation.

Round Robin (RR) - This algorithm works on the principle that every process gets an equal share of the CPU allocation time.This method helps for a starvation free execution of processes.

Multiple Level Queues - In this method, the ready queue is split into various separate queues and the processes are assigned to them based on different properties of the process like priority, size of memory needed etc.

With this I hope you have a fair understanding of Queue Data Structure and it's practical uses.Now we need to follow Gopher on his next adventure to learn about our next data structure.

P.S: The next data structure has a lot to do with the game of Treasure Hunt.Stay Tuned!

Join the conversation