What does a Kubernetes cluster look like? Sure, there are nodes, but how does it all work? Get to know all the major components of the Kubernetes system in this post.

On a whim one day (this was before I had taken the Kubernetes bait), I googled "Is Kubernetes difficult to learn?". It was one of those rare days where I was unusually motivated to learn something new.

Google said, "Yes girl, Kubernetes is difficult". Now I'd very much like to say "I was unfazed and determined....". You know the usual motivational "zero to hero" story. But let's get real here. I decided to indefinitely postpone learning Kubernetes. Why? Because I am lazy. (Don't judge me. You'd do it too!)

But when the time did come for me to learn about Kubernetes (and this time I couldn't postpone), I admit, it was a bit difficult. Scratch that. It was difficult. There were a lot of concepts I had to wrap my head around in a short amount of time.

One thing I realized is, no matter what, get the basics in place. And to ensure you don't suffer the same fate as I did, we're here to simplify things for you in this series. Okay, enough talk, let's get down to business.

In the last post, we talked about Pods. This time, we pick up another key topic. The Kubernetes architecture.

Recap

So we've already told you about the Kubernetes Master-Slave Concept. One node controls all the other nodes. However villainous it may sound, it is how things work in Kubernetes. But we didn't tell you HOW this whole master-slave thing works. I mean, what does this master node do? We'll tell you...

The Big Picture

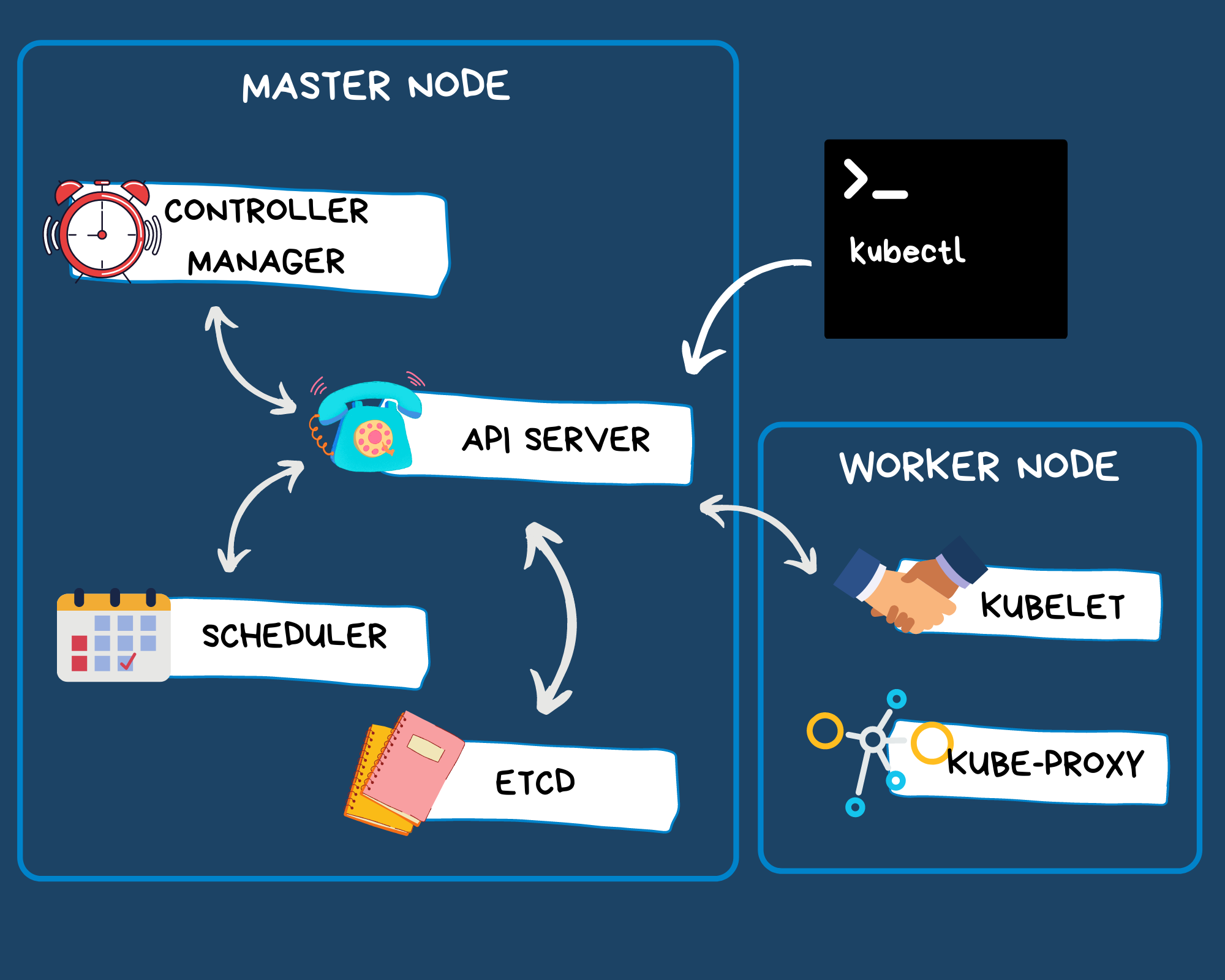

If you had to draw a typical cluster with its master and worker node components, this is what it might look like.

If you are wondering what all these fancy terms mean, then we're going to go through them one at a time.

Let's target the master node first.....

The Control Plane Team

Now your master node, codenamed the "CONTROL PLANE", is where most of the important tasks related to cluster management and administration take place. Largely, there are four main components:

- The API server

- The Scheduler

- The Controller Manager

And.....

- etcd

We'll talk about each of these...

API Server

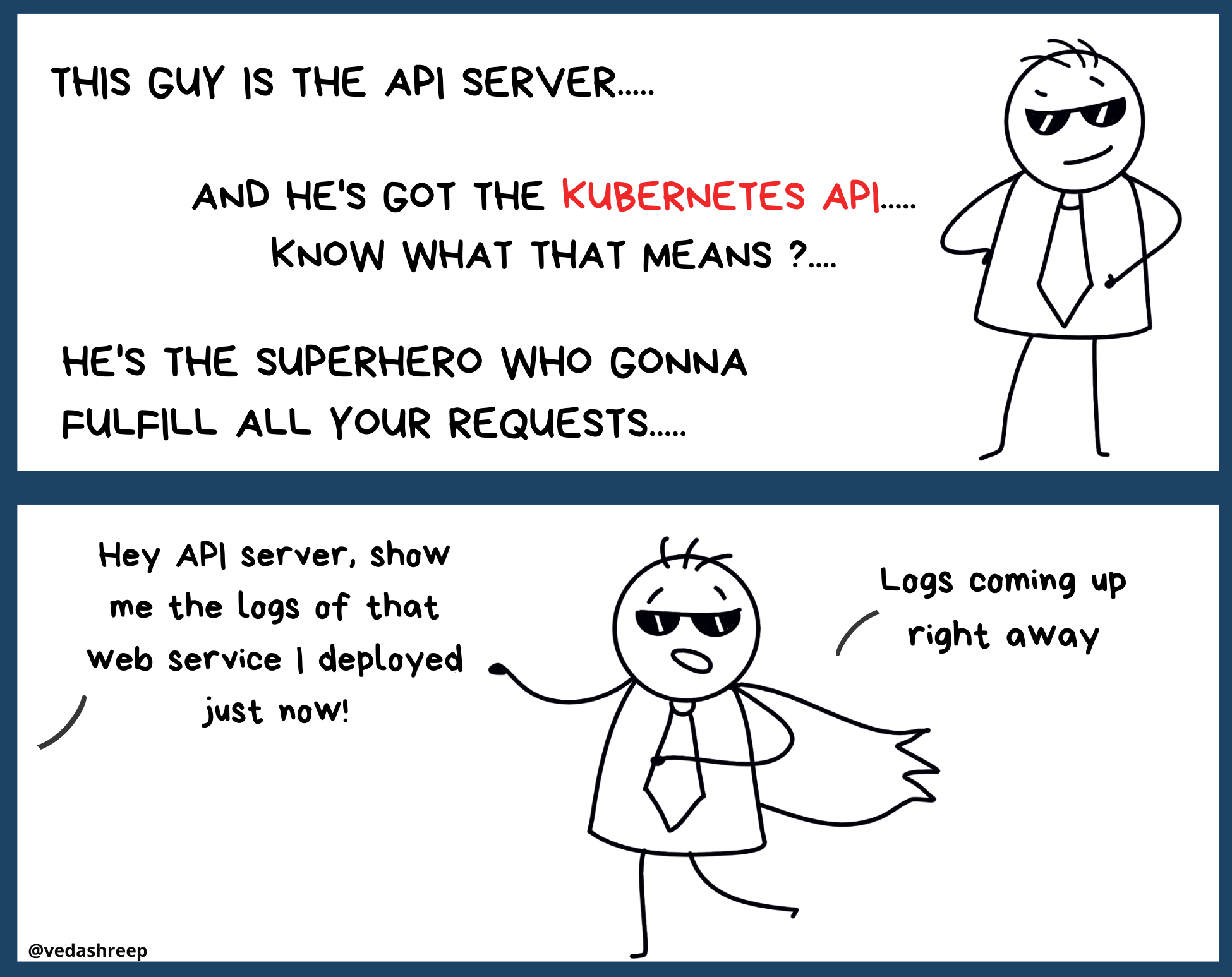

Number one. The API Server. Probably the most important one. The "face" of Kubernetes. Take that literally because if you wish to interact with a Kubernetes cluster, you'll probably have to go through the API server.

Basically, to perform any sort of action on the cluster, you always talk to the API server via the Kubernetes API. Using kubectl, REST or any of the Kubernetes Client libraries? All of them are calling the Kubernetes API and interacting with the API server behind the scenes.
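To make that a little more concrete, here's a minimal sketch of the kind of REST path a client ends up calling when it asks for the pods in a namespace. The helper name `pods_url` and the server address are made up for illustration; the `/api/v1/namespaces/<namespace>/pods` path is the one the Kubernetes API uses for pods. Roughly speaking, this is the request `kubectl get pods` sends on your behalf.

```python
def pods_url(server: str, namespace: str = "default") -> str:
    """Build the URL for listing pods in a namespace (hypothetical helper)."""
    # Strip a trailing slash so we never end up with "//api/v1/...".
    return f"{server.rstrip('/')}/api/v1/namespaces/{namespace}/pods"


if __name__ == "__main__":
    # The address below is a placeholder, not a real cluster.
    print(pods_url("https://my-cluster.example:6443", "kube-system"))
```

A real client would also attach credentials and handle TLS, which this sketch leaves out on purpose.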

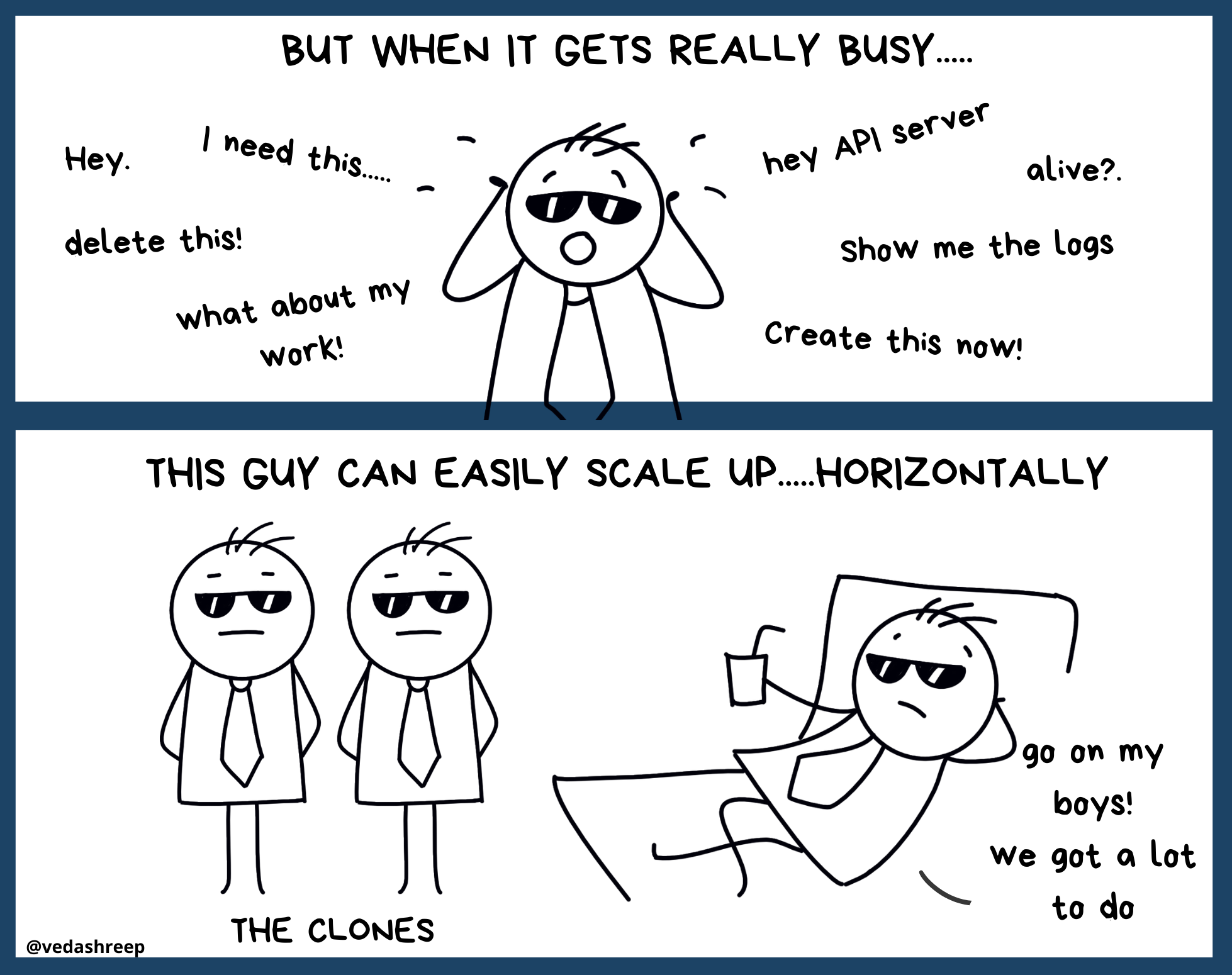

Now a cool feature of the API server is that it is designed to scale horizontally. Meaning that when there's a surge in requests coming in, you can run more instances (replicas) of the API server and balance the traffic between them to manage the load.

Scheduler

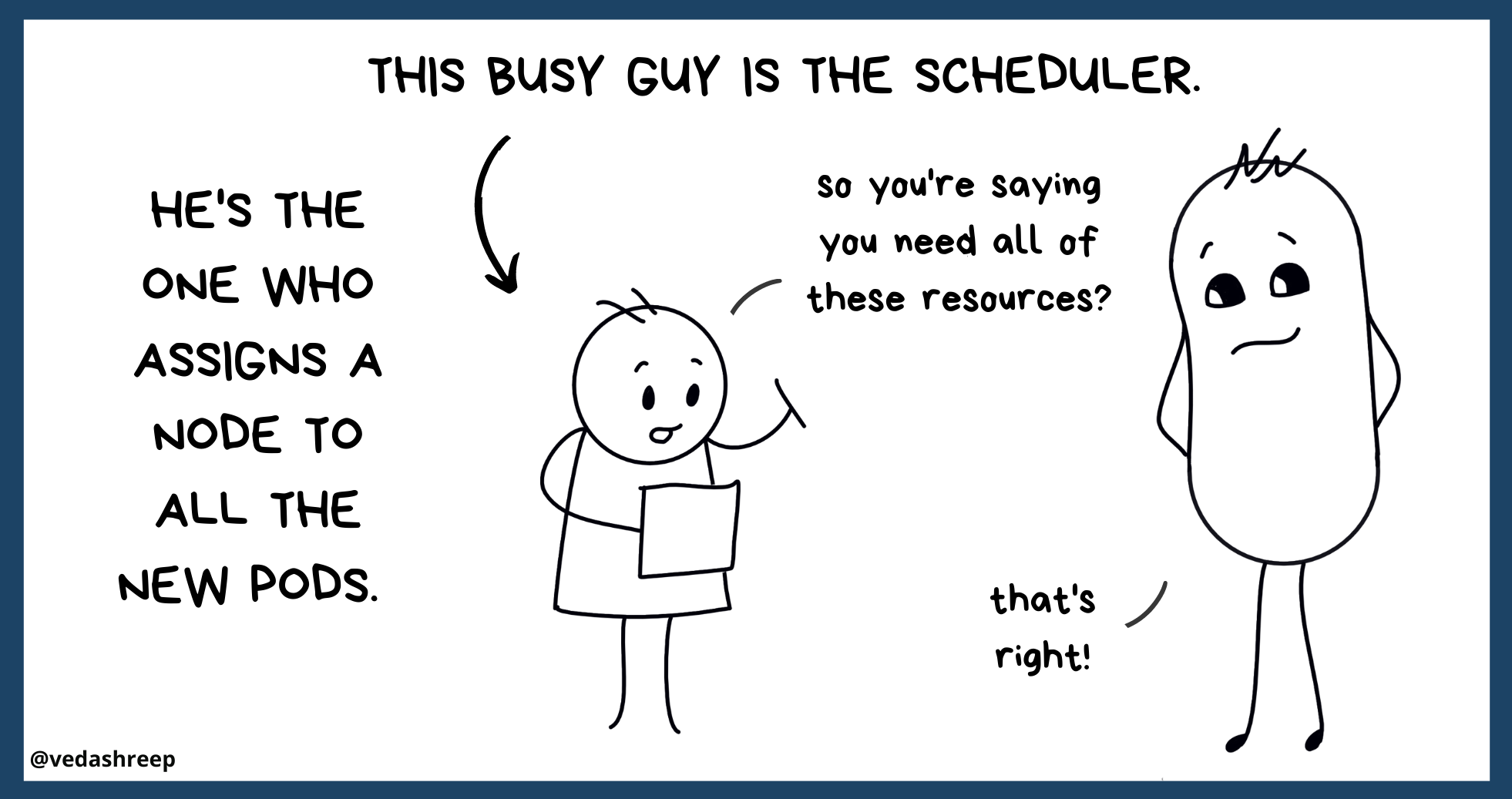

Next up: The Scheduler who schedules.

Schedules what?

Pods.

Schedules where?

Nodes.

Simple? Let's elaborate.

When a new pod is created, it's stuck in the "Pending" state until it's assigned a node to run on. (Read our last post on Pods. You'll get it). And this assignment is done by the Scheduler.

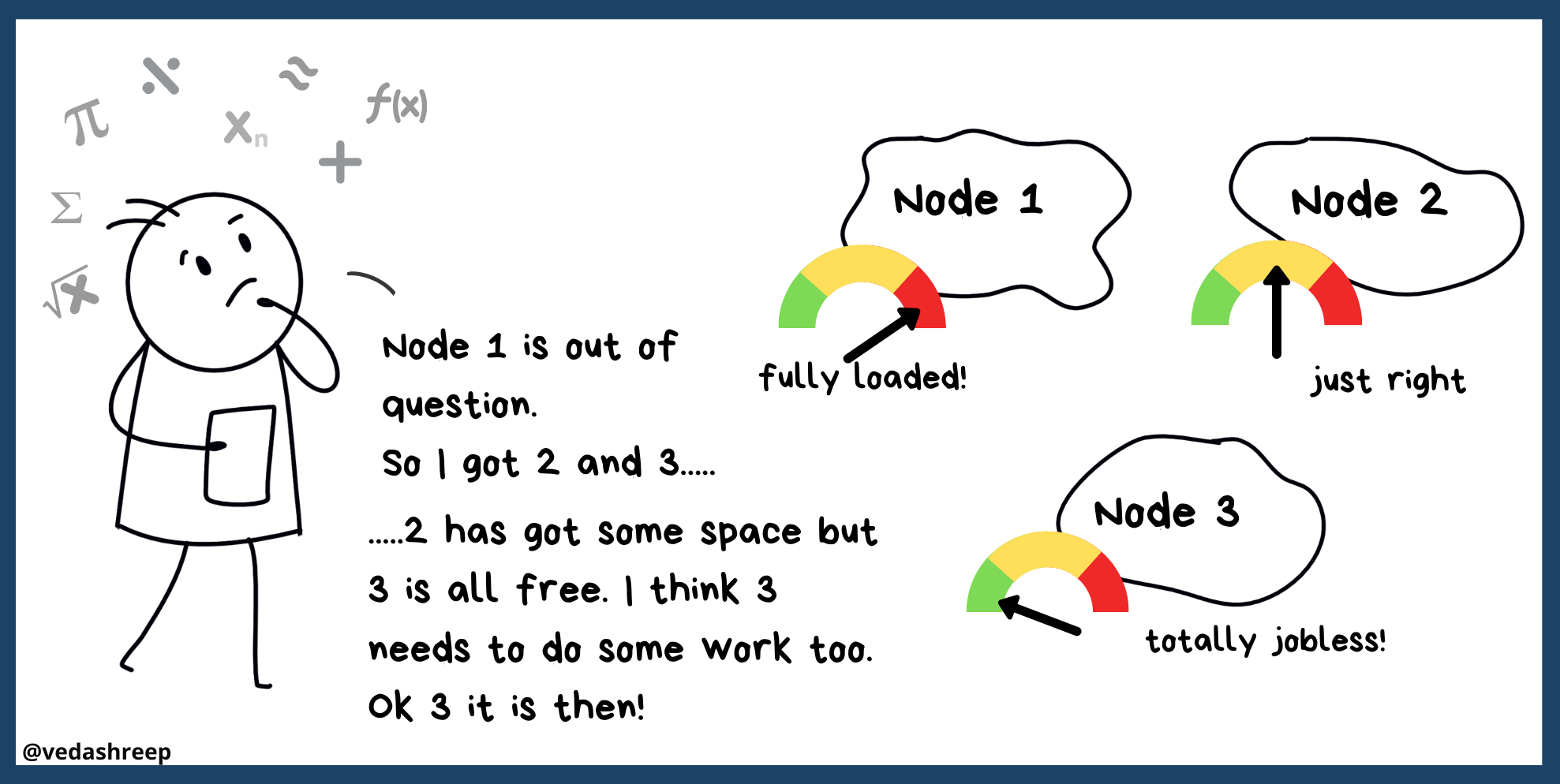

Every pod requires some resources to run. Memory, CPU, hardware...the usual. Now, it's up to the scheduler to decide which node fits the pod's requirements. Based on this, the scheduler does two things:

- Selects candidate nodes for the pod (this step is called filtering)

- Settles on the one node where the pod will run (this step is called scoring)
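The two steps above can be sketched in a few lines of Python. This is a toy model, not the real scheduler: the `Node` class, the `schedule` function and the "most CPU left over" score are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float    # CPU cores still available
    free_memory: int   # MiB still available


def schedule(pod_cpu: float, pod_memory: int, nodes: List[Node]) -> Optional[str]:
    """Pick a node for a pod: select candidates first, then choose one."""
    # Step 1: keep only the nodes that can fit the pod at all.
    candidates = [
        n for n in nodes
        if n.free_cpu >= pod_cpu and n.free_memory >= pod_memory
    ]
    if not candidates:
        return None  # no node fits, so the pod would stay Pending

    # Step 2: rank the candidates. This toy score prefers the node
    # with the most CPU left over after placing the pod.
    best = max(candidates, key=lambda n: n.free_cpu - pod_cpu)
    return best.name


if __name__ == "__main__":
    cluster = [Node("node-a", 1, 512), Node("node-b", 4, 4096), Node("node-c", 2, 8192)]
    print(schedule(1.5, 1024, cluster))
```

Here `node-a` is dropped in the first step because it is too small, and `node-b` wins the second step because it has the most CPU to spare.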

Controller Manager

You first need to know what a Controller is.

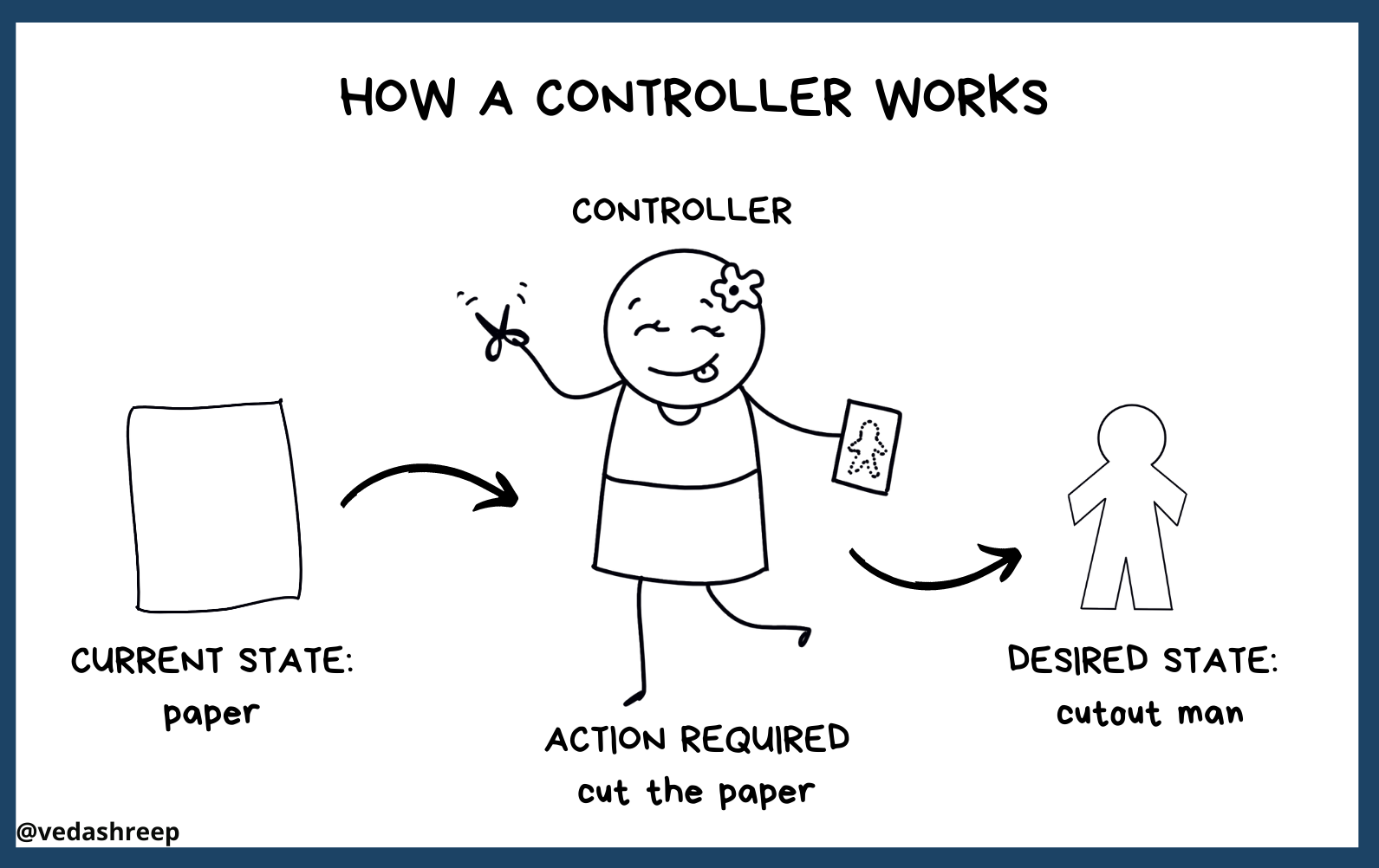

I'd like to call a Controller a "make it right" component. Why? Because its job is to make things right. Eh? It's going to watch the cluster. Watch it like a hawk. No rest here. And if something goes wrong, then the controller will take appropriate actions to correct it.

Let me rephrase this. In the cluster, there's a state called the "desired state". It's the state the cluster should be in. A controller will consider this state as the "right" one. Now, at any given time the cluster is in a state called the "current state", and the controller will do everything it can to move the current state toward the "desired" state.

It's like you take twenty seconds to run a hundred meters. But you want to reduce it to fifteen. You'll obviously have to run more every day to achieve your goals. That's the current state to desired state transition we're talking about.

In reality, a controller is just an infinite loop that's running and monitoring a resource on the cluster (like a pod maybe). And if anything goes wrong, it'll take some action to fix it.
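Here's a rough sketch of one pass of such a loop. It compares a desired number of pods with the pods that are actually running and works out what to do about the difference. The function name `reconcile` and the action strings are made up for illustration; a real controller would talk to the API server instead of returning a list.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """One pass of a toy control loop: return the actions needed."""
    actions: List[str] = []
    difference = desired_replicas - len(running_pods)
    if difference > 0:
        # Too few pods: create the missing ones.
        actions += ["create pod"] * difference
    elif difference < 0:
        # Too many pods: delete the surplus.
        surplus = -difference
        actions += [f"delete {name}" for name in running_pods[:surplus]]
    return actions  # empty list means current state == desired state


if __name__ == "__main__":
    print(reconcile(3, ["pod-a"]))
    print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))
```

A real controller would simply keep calling something like this over and over, which is where the "infinite loop" comes from.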

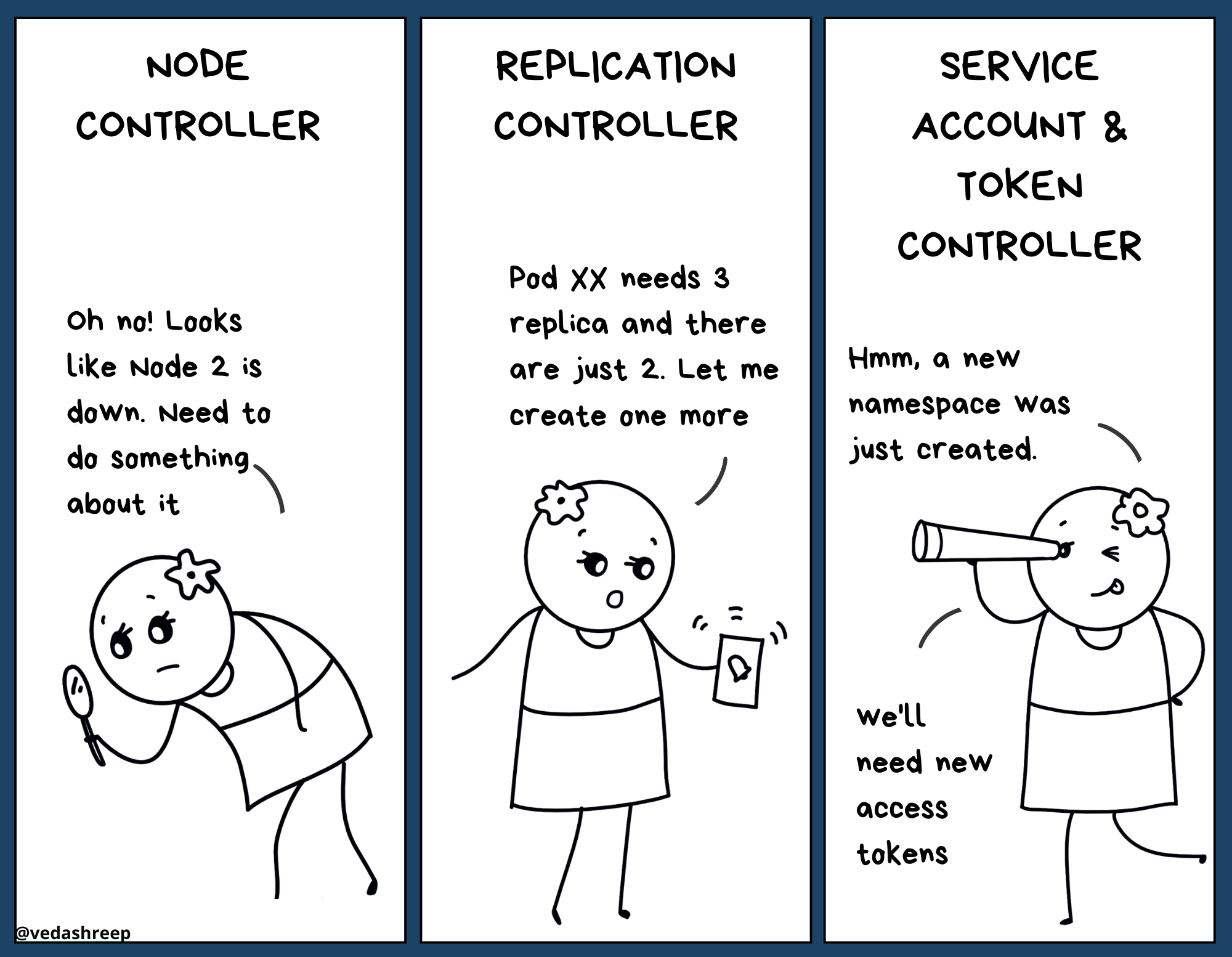

Now coming to the Controller Manager.

You can say the Controller Manager is a collection of various Controllers. So there can be one controller watching nodes, another watching jobs, and so on.

But it's an all-in-one deal. So basically, your controller manager is monitoring all these resources together. It's ONE process doing all of this. But it's multitasking, so it feels like there are multiple controllers working at the same time. Common examples include the node controller and the job controller.

Etcd

Kubernetes' personal journal. That's etcd for you folks! Now tell me, why do we keep personal diaries and journals? Because we want to record our everyday moments! (Because our brains cannot store every possible detail of each day in our lives!)

Same with Kubernetes. Everything happening on the cluster needs to be recorded. Everything! That's why etcd comes into the picture. It's a "key-value" database that acts as the backing store for all of the cluster's data.
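If "key-value" sounds abstract, here's a tiny sketch of the idea using a plain Python dictionary. To be clear, `ToyStore` is not the etcd API; it's a stand-in made up for illustration. The key layout imitates the `/registry/...` prefix Kubernetes uses by default, and the values are stored as JSON here only to keep them readable.

```python
import json
from typing import Dict, List


class ToyStore:
    """A dict standing in for etcd. Not the real etcd client API."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: dict) -> None:
        # Store the object as a string under its key.
        self._data[key] = json.dumps(value)

    def get(self, key: str) -> dict:
        return json.loads(self._data[key])

    def keys_with_prefix(self, prefix: str) -> List[str]:
        # Prefix lookups are how "list all pods in a namespace" can work.
        return sorted(k for k in self._data if k.startswith(prefix))


if __name__ == "__main__":
    store = ToyStore()
    store.put("/registry/pods/default/web", {"image": "nginx", "phase": "Running"})
    store.put("/registry/pods/default/db", {"image": "postgres", "phase": "Pending"})
    print(store.keys_with_prefix("/registry/pods/default/"))
```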

Next up we move over to the worker nodes....

The Node Team

We looked at the master node. But the real work takes place at the worker nodes. And that's because there are a few components on each of these nodes ensuring everything runs smoothly. These include:

- Kubelet

- Kube-Proxy

and also a Container Runtime

Kubelet

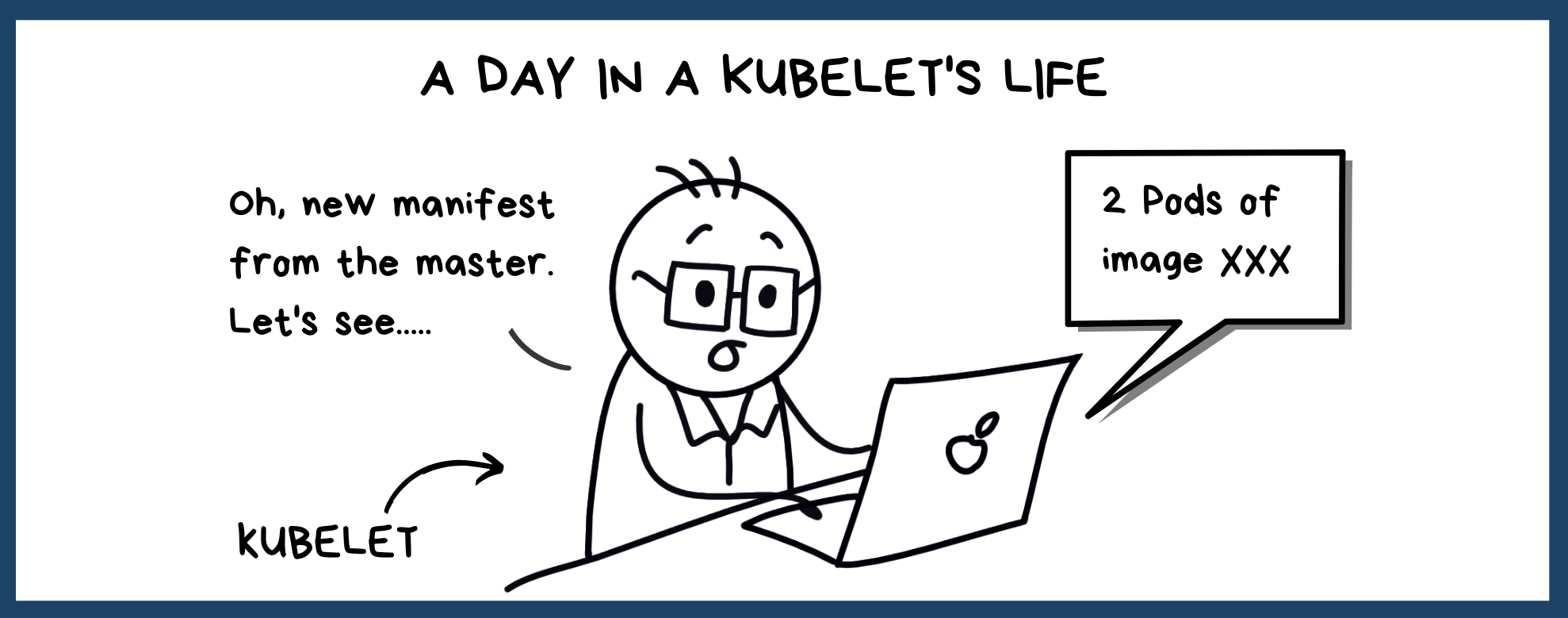

This guy is probably the most important one. The kubelet is an agent. An agent who ensures that everything is working as expected on a node. That includes a number of tasks.

Number one: It's going to communicate with the master node. Usually, the master node will send a command in the form of a manifest or a Podspec which will define what work needs to be carried out and what pods need to be created.

Depending on this, the kubelet will communicate with the container runtime on the node. The container runtime will pull the images required, and the kubelet will then start and monitor the pods created from these images.
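That back-and-forth between the kubelet and the container runtime can be sketched like this. Everything here is a simplification made up for illustration: `FakeRuntime` just records what it is asked to do, and the pod specs are plain dictionaries with a `name` and an `image`.

```python
from typing import Dict, List


class FakeRuntime:
    """Stand-in for a container runtime; it only records requests."""

    def __init__(self) -> None:
        self.pulled: List[str] = []
        self.running: Dict[str, str] = {}  # pod name -> image

    def pull(self, image: str) -> None:
        # Only pull an image the first time it is needed.
        if image not in self.pulled:
            self.pulled.append(image)

    def start(self, pod_name: str, image: str) -> None:
        self.running[pod_name] = image


def sync_pods(pod_specs: List[dict], runtime: FakeRuntime) -> List[str]:
    """Start every pod from the specs that is not running yet."""
    started = []
    for spec in pod_specs:
        name, image = spec["name"], spec["image"]
        if name in runtime.running:
            continue  # already running, nothing to do
        runtime.pull(image)
        runtime.start(name, image)
        started.append(name)
    return started


if __name__ == "__main__":
    runtime = FakeRuntime()
    specs = [{"name": "web", "image": "nginx:1.25"}, {"name": "web-2", "image": "nginx:1.25"}]
    print(sync_pods(specs, runtime))
    print(runtime.pulled)
```

Calling `sync_pods` a second time with the same specs starts nothing new, which is the behaviour you'd want from an agent that keeps checking its node.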

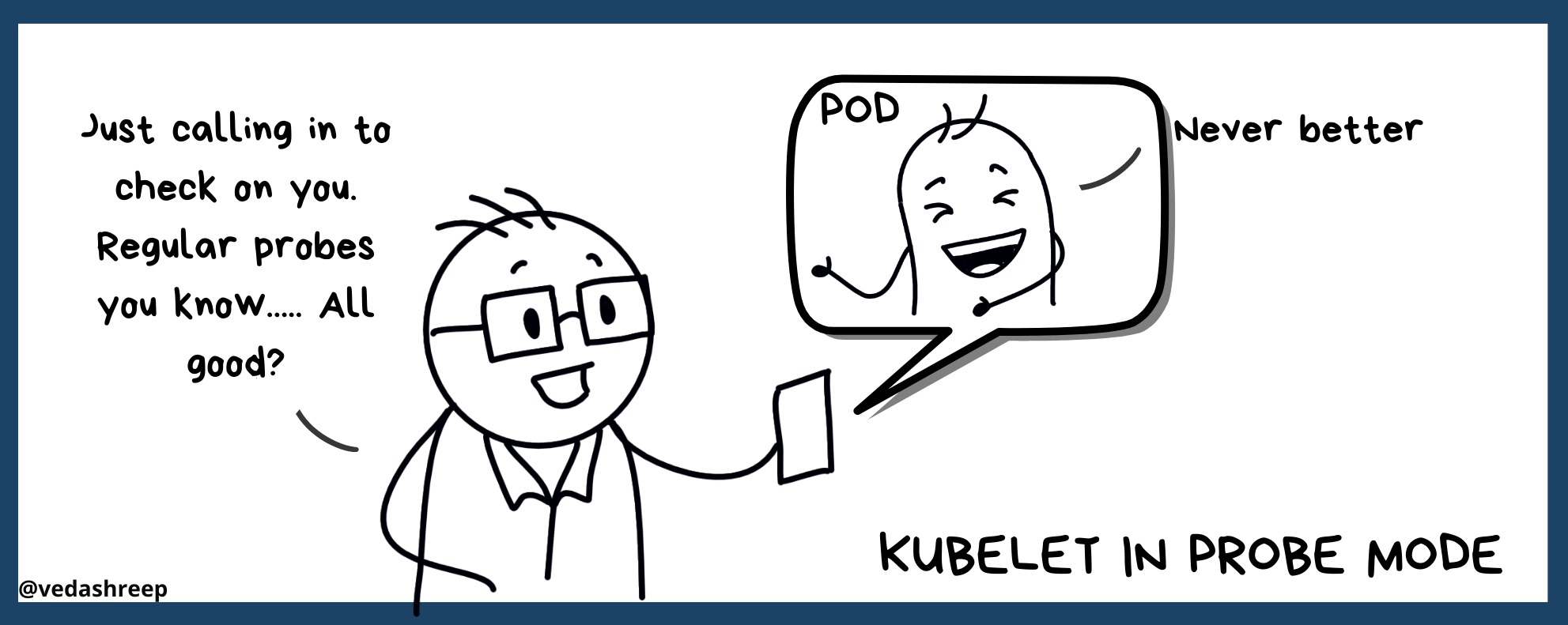

Remember we talked about probes in our last post? Who carries out these probes? The kubelet! Because it's responsible for keeping tabs on the pod's overall health!

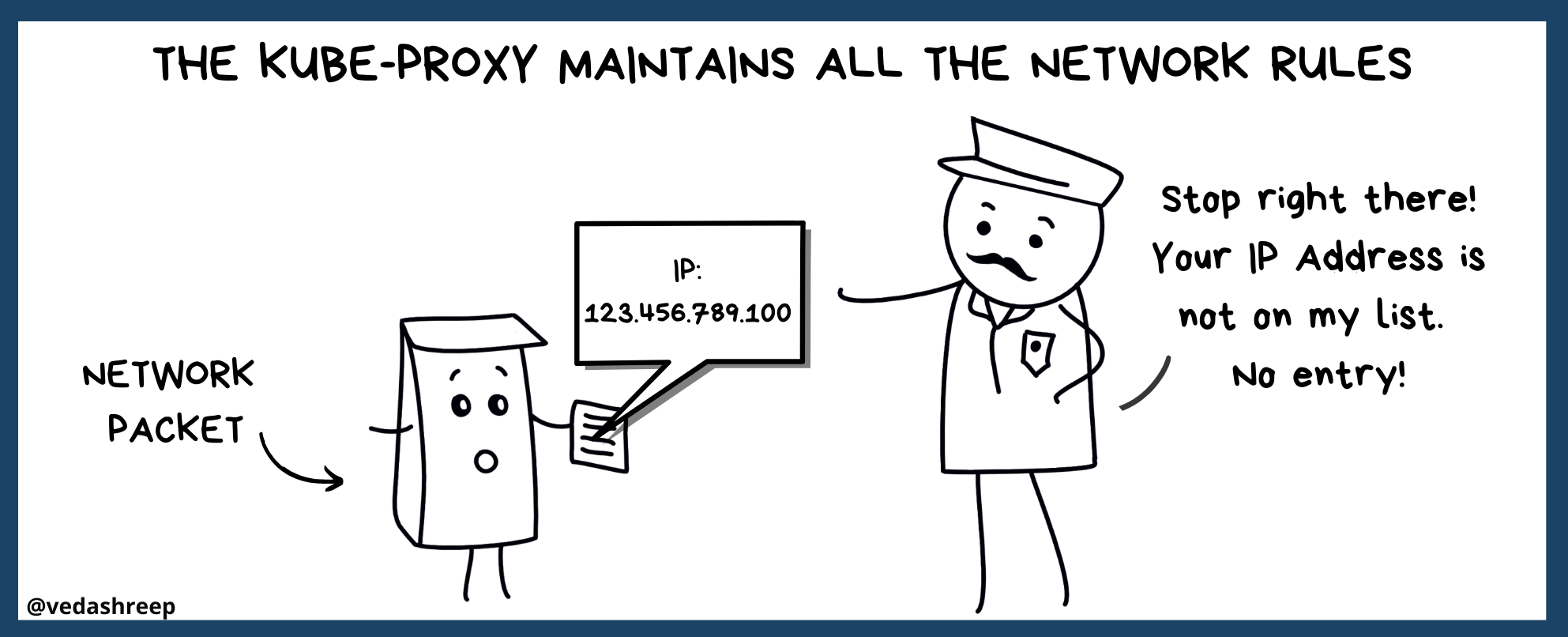

Kube-Proxy

The next big thing required on the node is networking. And the Kube-Proxy is there to handle it. It's sort of a load balancer because it maintains the network rules on the node and ensures traffic is routed to the appropriate pods. You can say a big part of the communication across the cluster is handled by the Kube-Proxy.
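To picture the "sort of a load balancer" part, here's a toy sketch that spreads requests across a set of pod IPs in round-robin order. The `ToyProxy` class and the IP addresses are made up for illustration. The real Kube-Proxy typically programs network rules on the node rather than forwarding each request itself, and its balancing strategy depends on the mode it runs in.

```python
import itertools
from typing import Dict, Iterator, List


class ToyProxy:
    """Round-robin over pod IPs. A mental model, not the real thing."""

    def __init__(self) -> None:
        self._backends: Dict[str, Iterator[str]] = {}

    def set_endpoints(self, service: str, pod_ips: List[str]) -> None:
        # cycle() hands out the IPs one by one, forever, in order.
        self._backends[service] = itertools.cycle(pod_ips)

    def route(self, service: str) -> str:
        return next(self._backends[service])


if __name__ == "__main__":
    proxy = ToyProxy()
    proxy.set_endpoints("web", ["10.0.0.1", "10.0.0.2"])
    for _ in range(3):
        print(proxy.route("web"))
```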

In Summary

We looked at the concept of pods and then we looked at the main components of a Kubernetes cluster. But we're far from done. We still have a few concepts to go. We haven't talked much about Networking. Then there are workloads in Kubernetes. And also configurations! Don't worry, you'll understand all of this in our upcoming posts in this series. Stay tuned till then!
