Startups and other companies are becoming more cost-conscious, and reducing server costs is an important KPI for any CTO. Although it is easy to spin up servers on AWS at the click of a button, it comes at a cost, particularly for RDS, the managed service for relational databases. In this blog we propose a way to reduce the cost of hosting a development database on AWS: instead of using RDS, install Postgres on a medium-sized EC2 instance and serve it from there. This cuts the cost by at least half.

While doing backend development, cost is always a factor that needs to be considered. Ideally, during the development phase, the expectation is to:

- Develop code that runs on the developer's local machine

- Run unit tests

- Ensure the same code works everywhere (write once, run anywhere)

- Release the code.

While provisioning environments in early-stage startups, we usually end up creating only development and production environments. For development environments, it is advisable to keep the compute size and machine configuration at the lowest possible tier so that features can be deployed and tested at minimal cost.

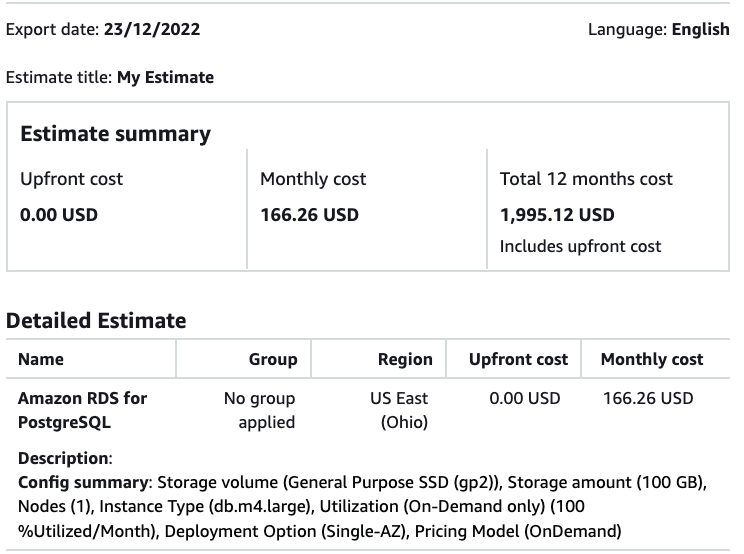

The database is one of the more expensive infrastructure components on AWS when you provision it through RDS. A single-AZ RDS instance can cost around 150 USD a month.

If we add up the other components, the cost rises to 250-300 USD a month. To reduce it, we optimize the database cost in the development environment by spinning up an EC2 instance with a minimal configuration and installing Postgres on it. The steps are as follows:

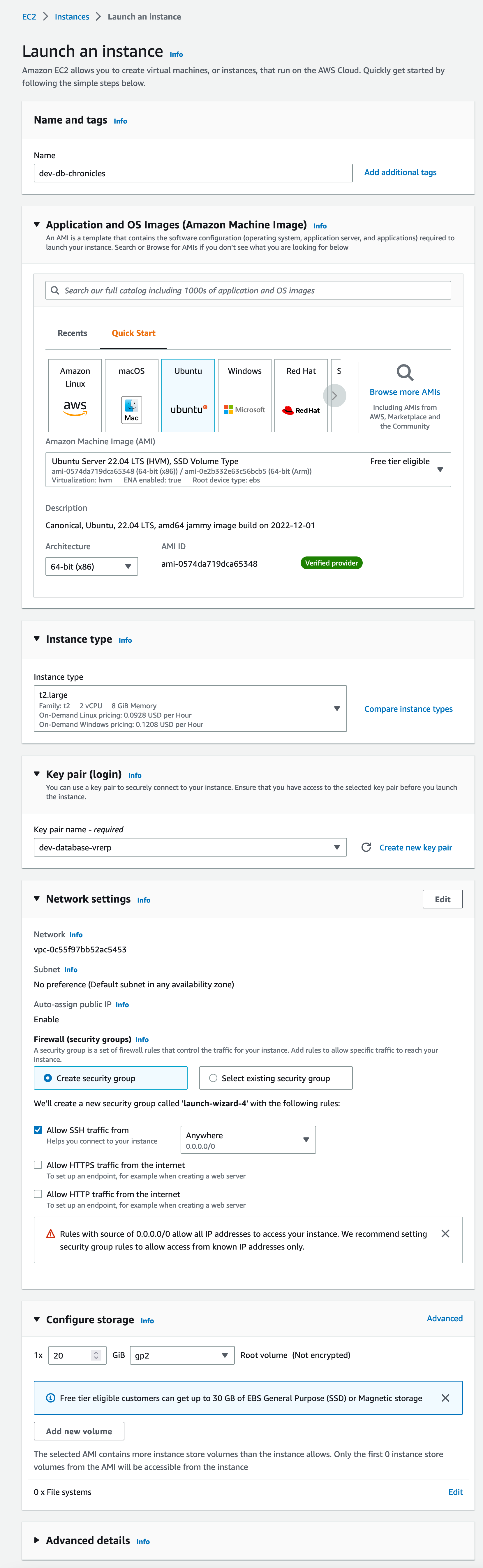

Step 1 - Create an EC2 Instance
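
The instance can be created from the AWS console. If you prefer something repeatable, here is a minimal sketch using the AWS CLI. The AMI ID, key pair name, and security group ID are hypothetical placeholders, and `t3.medium` is only an assumption for a "medium sized" instance. The function prints the command for review and runs it only when `RUN=1` is set.

```shell
#!/bin/sh
# Sketch: launch a small EC2 instance for the dev database with the AWS CLI.
# Every ID below is a hypothetical placeholder; replace it with your own value.
AMI_ID="${AMI_ID:-ami-xxxxxxxxxxxxxxxxx}"      # an Ubuntu AMI in your region (placeholder)
INSTANCE_TYPE="${INSTANCE_TYPE:-t3.medium}"    # assumed "medium" size
KEY_NAME="${KEY_NAME:-dev-db-key}"             # hypothetical key pair name
SG_ID="${SG_ID:-sg-xxxxxxxxxxxxxxxxx}"         # placeholder security group ID

launch_dev_db_instance() {
  # Build the command as positional parameters so it can be printed or executed.
  set -- aws ec2 run-instances \
    --image-id "$AMI_ID" \
    --instance-type "$INSTANCE_TYPE" \
    --key-name "$KEY_NAME" \
    --security-group-ids "$SG_ID" \
    --count 1
  if [ "${RUN:-0}" = "1" ]; then
    "$@"          # actually call AWS
  else
    echo "$*"     # dry run: only show the command
  fi
}

launch_dev_db_instance
```

Run it once as a dry run to check the values, then again with `RUN=1` to create the instance.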

Step 2 - Install Docker

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg lsb-release

sudo mkdir -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-compose-plugin
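
Before moving on, it can help to confirm that the Docker CLI is actually available. The helper below is a small sketch; the function name `check_docker` is made up for this post.

```shell
#!/bin/sh
# Sketch: confirm the Docker CLI is installed and print its version.
check_docker() {
  if command -v docker >/dev/null 2>&1; then
    docker --version
  else
    echo "docker not found on PATH" >&2
    return 1
  fi
}
```

After defining it, `check_docker && sudo docker run --rm hello-world` verifies both the CLI and the daemon by running Docker's small test image.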

Step 3 - Pull the Postgres image

sudo docker pull postgres

Step 4 - Run the Docker Image

sudo docker run --name postgresql -e POSTGRES_DB=demo-db -e POSTGRES_USER=demo -e POSTGRES_PASSWORD=demo123 -p 5432:5432 -v /data:/var/lib/postgresql/data -d postgres:latest

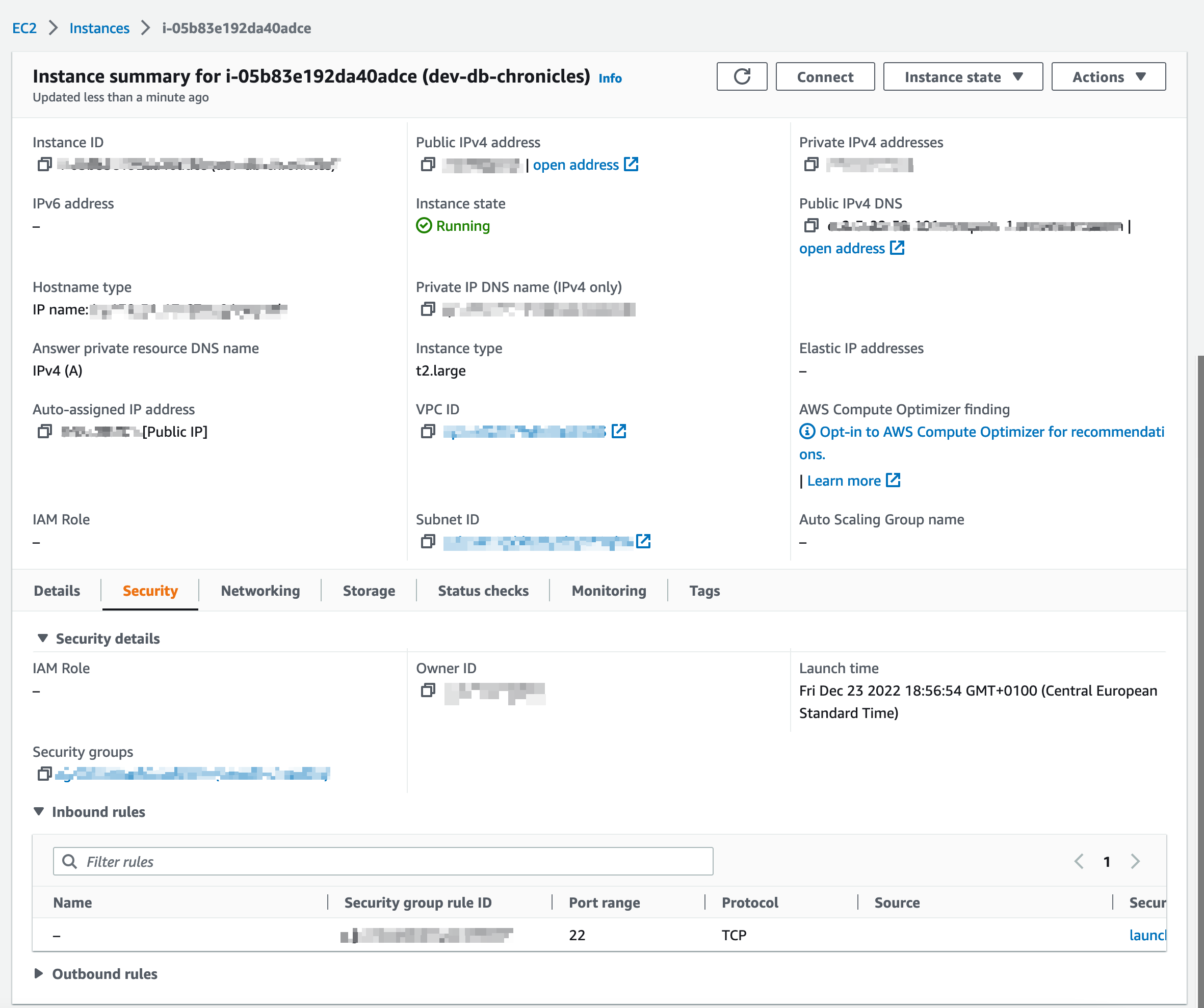
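
The container starts in the background, so Postgres may need a few seconds before it accepts connections. Here is a sketch of a readiness check that polls `pg_isready`, which ships with the official postgres image. It assumes the container name, user, and database from the run command above; `wait_for_postgres` and `WAIT_SECONDS` are names invented for this example, and you may need to run it as a user who can call `docker` without sudo.

```shell
#!/bin/sh
# Sketch: poll the container until Postgres accepts connections.
# Arguments: container name (default: postgresql), max attempts (default: 30).
wait_for_postgres() {
  container="${1:-postgresql}"
  attempts="${2:-30}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # pg_isready exits 0 once the server is accepting connections.
    if docker exec "$container" pg_isready -U demo -d demo-db >/dev/null 2>&1; then
      echo "postgres is ready after $i attempt(s)"
      return 0
    fi
    sleep "${WAIT_SECONDS:-2}"   # pause between attempts
    i=$((i + 1))
  done
  echo "postgres did not become ready after $attempts attempts" >&2
  return 1
}
```

Call it as `wait_for_postgres postgresql 30` right after the run command.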

Step 5 - Go to the EC2 instance that was created and open the Security tab

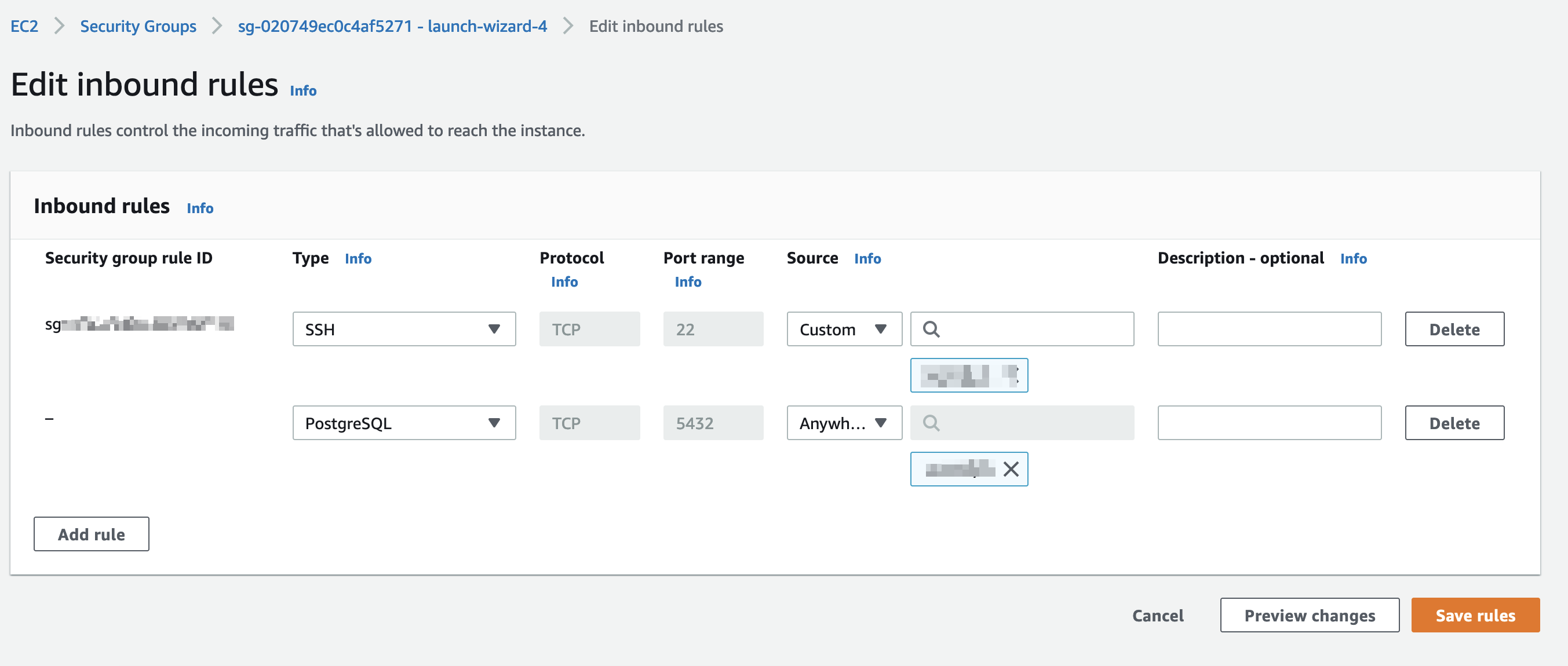

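Step 6 - Click on the security group and add a new inbound rule of type PostgreSQL

To allow connections from any IP address, set the source to 0.0.0.0/0. This opens port 5432 to the whole internet, so use a narrower source range, such as your office or VPN IP, whenever you can.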
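
The same inbound rule can be added from the command line. This is a sketch using the AWS CLI, with a placeholder security group ID. The function name and the `RUN` switch are made up for this post: the command is printed for review and executed only when `RUN=1` is set.

```shell
#!/bin/sh
# Sketch: open the Postgres port on the instance's security group.
SG_ID="${SG_ID:-sg-xxxxxxxxxxxxxxxxx}"        # placeholder security group ID
ALLOWED_CIDR="${ALLOWED_CIDR:-0.0.0.0/0}"     # source range allowed to connect

allow_postgres_ingress() {
  # Build the command as positional parameters so it can be printed or executed.
  set -- aws ec2 authorize-security-group-ingress \
    --group-id "$SG_ID" \
    --protocol tcp \
    --port 5432 \
    --cidr "$ALLOWED_CIDR"
  if [ "${RUN:-0}" = "1" ]; then
    "$@"          # actually call AWS
  else
    echo "$*"     # dry run: only show the command
  fi
}

allow_postgres_ingress
```

To limit access to a single machine, set `ALLOWED_CIDR` to that address with a `/32` suffix, for example `ALLOWED_CIDR=203.0.113.10/32` (an address from the documentation range, used here only as an illustration).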

At this point the database should be fully set up and accessible from any Postgres client.
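
As a quick check from your own machine, you can connect with `psql` using a connection URI. The sketch below builds the URI from the credentials used in the run command. `DB_HOST` is a placeholder for the instance's public IP or DNS name, and `build_pg_uri` is a helper name made up for this example.

```shell
#!/bin/sh
# Sketch: build a Postgres connection URI for the dev database.
# Format: postgresql://user:password@host:port/dbname
build_pg_uri() {
  host="$1"
  echo "postgresql://demo:demo123@${host}:5432/demo-db"
}

DB_HOST="${DB_HOST:-203.0.113.10}"   # hypothetical address; use your instance's public IP
uri=$(build_pg_uri "$DB_HOST")

# Print the psql command that tests the connection.
echo "psql '$uri' -c 'SELECT version();'"
```

Running the printed command should return the Postgres version if the security group rule and the container are both in place.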

Additional Note:

If for some reason the database wasn't created with the specified username and password, go ahead and create it manually.

Extra Step - Create a new user

sudo docker exec -it postgresql psql -U postgres

postgres=# create database "demo-db";

postgres=# create user demo with encrypted password 'demo123';

postgres=# grant all privileges on database "demo-db" to demo;

Conclusion - This brings your database cost down to 60-70 USD a month, even if the instance runs 24x7 for 30 days. For a small startup that saving is a big deal, and the money can be invested elsewhere. For production, please use a managed service such as RDS rather than taking on the headache of managing Postgres yourself.
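
To put numbers on the saving, here is a small sketch that works through the arithmetic with the rough monthly figures quoted in this post (150 USD for RDS, 60-70 USD for EC2). The function name `savings_percent` is made up for this example, and real prices will vary by region and instance type.

```shell
#!/bin/sh
# Sketch: monthly savings from the approximate figures in this post (USD).
# savings_percent <rds_monthly> <ec2_monthly> prints the saving as a whole percent.
savings_percent() {
  echo $(( ($1 - $2) * 100 / $1 ))   # integer division, rounds down
}

rds_monthly=150
for ec2_monthly in 60 70; do
  saved=$((rds_monthly - ec2_monthly))
  percent=$(savings_percent "$rds_monthly" "$ec2_monthly")
  echo "EC2 at ${ec2_monthly} USD: saves ${saved} USD/month (~${percent}%)"
done
```

With these inputs the saving comes to 80-90 USD a month, which is a little over half of the RDS figure.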
